Introduction

Why Nodes?

Engineers love programming languages. Analysts prefer SQL. Business people are fond of visual tools.

It’s tricky to communicate when teammates speak and think in different ways, especially in a fast-moving world where people change teams, new challenges arise daily, and the environment is constantly changing. If every change takes weeks, you fall behind.

Fabrique.ai Nodes give teams an intuitive, lightweight way to build, run, maintain, reuse, extend, and share event-flow processing projects. No code is needed.

The Node Collection is intentionally limited to a small set of typical operations that cover the most common scenarios and shorten the learning curve. At the same time, the Node Collection is extensible, and new functionality can be added without friction.

Node

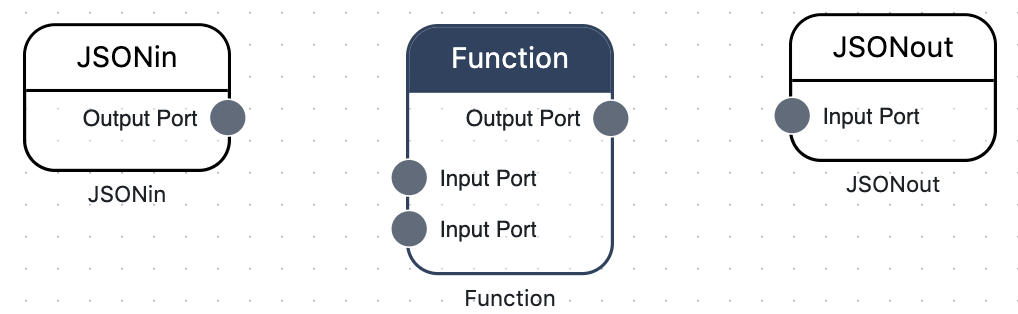

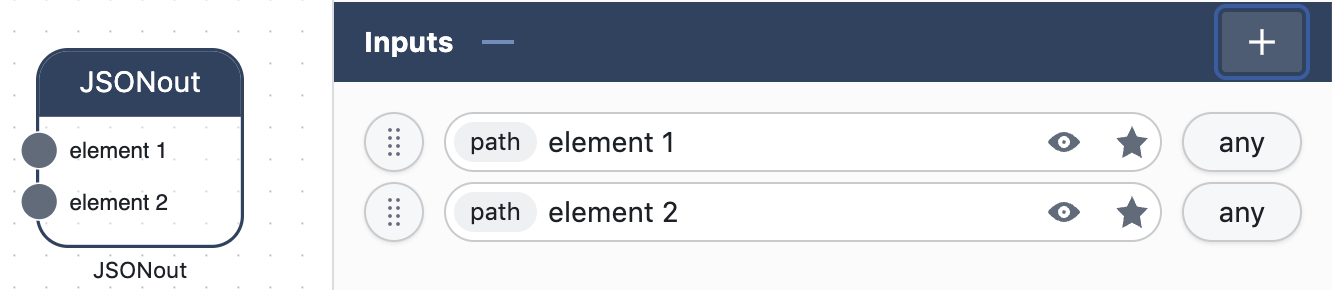

Nodes have Input and Output Ports.

A Node receives values on its Input Ports, executes its processing logic, and transmits the resulting values to its Output Ports.
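Because Fabrique Nodes are configured visually, there is no code to show here; the following Python sketch only illustrates the receive, process, and transmit cycle. The `Node` class, its fields, and the `process` method are hypothetical names invented for this illustration and are not part of the Fabrique SDK.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Node:
    """Hypothetical model of a Node (illustration only, not the Fabrique SDK)."""
    name: str
    input_ports: List[str]
    output_ports: List[str]
    # Processing logic: maps Input Port values to Output Port values.
    logic: Callable[[Dict[str, Any]], Dict[str, Any]]

    def process(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        # Every declared Input Port must receive a value.
        missing = [p for p in self.input_ports if p not in inputs]
        if missing:
            raise ValueError(f"{self.name}: no value for input ports {missing}")
        outputs = self.logic({p: inputs[p] for p in self.input_ports})
        # The logic may only write to declared Output Ports.
        unknown = sorted(set(outputs) - set(self.output_ports))
        if unknown:
            raise ValueError(f"{self.name}: undeclared output ports {unknown}")
        return outputs


# A Node with two Input Ports and one Output Port.
adder = Node("adder", ["a", "b"], ["sum"], lambda v: {"sum": v["a"] + v["b"]})
print(adder.process({"a": 2, "b": 3}))  # {'sum': 5}
```

In this sketch a Node rejects missing inputs and undeclared outputs, so its Ports act as a fixed contract between the Node and its neighbors.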

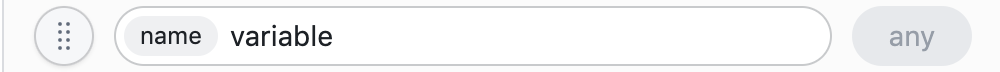

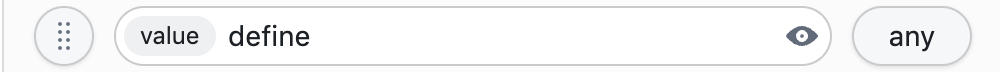

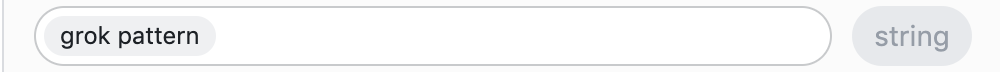

Each Port has an input field that specifies the operation applied to the value, and a label in that field that prompts for the operation.

Depending on the Node, Input and Output Ports may operate on values of different types in different ways.

For example: query a path, apply a formula, name a variable, define a value, apply a pattern, write code, etc.

A Port may require you to specify the data type.

For example, integer, number, boolean, string, object, array, or any.
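A minimal Python sketch of how a declared Port type could be checked, assuming the types map onto JSON-style values (object as `dict`, array as `list`). The table `PORT_TYPE_CHECKS` and the function `matches_port_type` are hypothetical; the exact Fabrique validation rules are not described in this section.

```python
from typing import Any, Callable, Dict

# Hypothetical checks for the Port data types listed above. Python's bool is a
# subclass of int, so booleans are excluded explicitly from integer and number.
PORT_TYPE_CHECKS: Dict[str, Callable[[Any], bool]] = {
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
    "string": lambda v: isinstance(v, str),
    "object": lambda v: isinstance(v, dict),
    "array": lambda v: isinstance(v, list),
    "any": lambda v: True,
}


def matches_port_type(value: Any, port_type: str) -> bool:
    """Return True if `value` is acceptable for a Port declared with `port_type`."""
    if port_type not in PORT_TYPE_CHECKS:
        raise ValueError(f"unknown port type: {port_type}")
    return PORT_TYPE_CHECKS[port_type](value)


print(matches_port_type(3, "number"), matches_port_type(True, "integer"))  # True False
```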

Input and Output Ports can be combined into Port Groups.

For example, unnamed, condition, if condition, else.

Nodes can have default, mandatory, or optional Ports.

Ports can be added, deleted, or hidden if the Node's processing logic allows it.

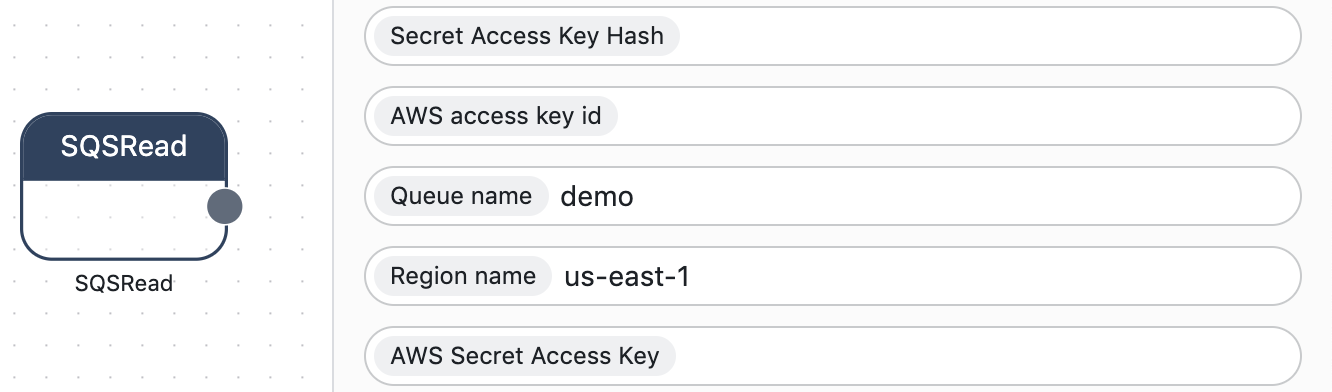

Nodes may require special configuration parameters, and they encrypt sensitive credentials before storing them.

Nodes may verify configurations to minimize the risk of errors.

Nodes produce logs and error messages to help detect errors in Runtime.

Fabrique provides an SDK for extending the Node Collection and Node functionality.

Node Collection

Nodes are divided into 6 groups.

Nodes are assigned to Groups according to their specific purpose.

Conditional Nodes

Filter → receives values on the Input Ports and transmits them unchanged to the Output Ports if the value on the conditional Port is true; otherwise, it transmits nothing.

If-Else → receives values on the Input Ports and transmits them unchanged to the Output Ports of the if condition group if the value on the conditional Port is true; otherwise, it transmits them to the Output Ports of the else group.
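The Filter and If-Else semantics can be sketched in Python as plain functions. The function names and the representation of Port values as a dictionary are assumptions for illustration; only the Port Group names `if condition` and `else` come from the Port Group examples earlier in this document.

```python
from typing import Any, Dict, Optional, Tuple


def filter_node(values: Dict[str, Any], condition: bool) -> Optional[Dict[str, Any]]:
    """Filter: pass the values through unchanged if condition is true, else emit nothing."""
    return dict(values) if condition else None


def if_else_node(values: Dict[str, Any], condition: bool) -> Tuple[str, Dict[str, Any]]:
    """If-Else: route the values unchanged to the 'if condition' or the 'else' Port Group."""
    group = "if condition" if condition else "else"
    return group, dict(values)


event = {"user": "ann", "amount": 120}
print(filter_node(event, event["amount"] > 100))   # {'user': 'ann', 'amount': 120}
print(filter_node(event, event["amount"] > 500))   # None
print(if_else_node(event, event["amount"] > 500))  # ('else', {'user': 'ann', 'amount': 120})
```

The key difference: Filter drops non-matching values, while If-Else never drops anything and only chooses which group receives them.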

Functional Nodes

Function → receives the values of variables on the Input Ports, applies functions to the values, and sends the results to the Output Ports.

Random → receives a trigger on the Input Port, applies a random function from the collection to generate a random value, and sends the value to the Output Port.
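A rough Python sketch of the two Functional Nodes, assuming each Output Port of the Function Node is bound to one formula over the named input variables, and that the Random Node's random function is a uniform draw on [0, 1). `function_node`, `random_node`, and the example variables are hypothetical.

```python
import random
from typing import Any, Callable, Dict


def function_node(variables: Dict[str, Any],
                  functions: Dict[str, Callable[..., Any]]) -> Dict[str, Any]:
    """Apply each function to the named input variables; each result goes to its own Output Port."""
    return {port: fn(**variables) for port, fn in functions.items()}


def random_node(trigger: Any, rng: random.Random) -> float:
    """Any trigger value fires the Node; here the random function is uniform on [0, 1)."""
    return rng.random()


outputs = function_node(
    {"price": 10.0, "qty": 3},
    {"total": lambda price, qty: price * qty,
     "with_fee": lambda price, qty: price * qty + 2.5},
)
print(outputs)  # {'total': 30.0, 'with_fee': 32.5}
print(random_node(trigger=True, rng=random.Random(42)))  # a float in [0, 1)
```

Passing a seeded `random.Random` instance keeps the sketch reproducible in tests.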

In/Out Nodes

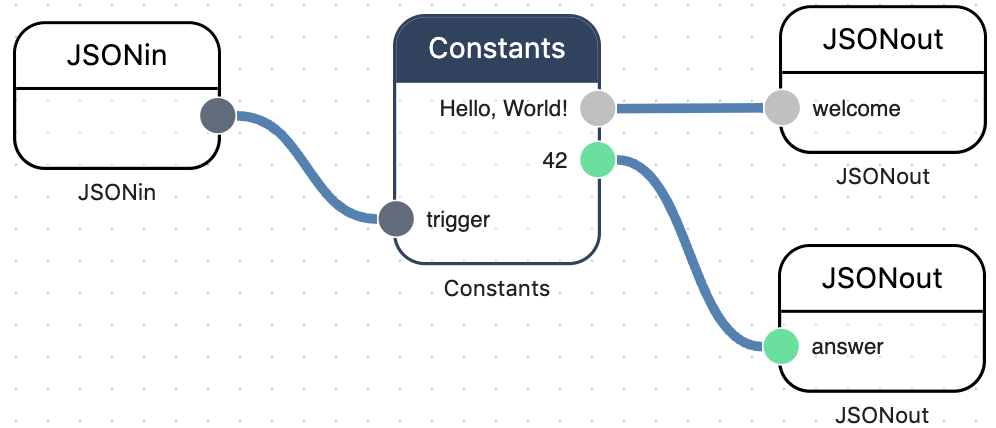

JSONin → reads JSON messages from the Input Topic, deserializes them, decomposes JSON objects into elements (except arrays), gets the values of the elements, and sends those values to the Output Ports.

JSONout → receives values on the Input Ports, composes them into the elements and objects of JSON messages, serializes the JSON messages, and writes them to the Output Topic.

SQSWrite → receives values on the Input Ports, composes and serializes JSON messages, connects to the AWS SQS message queuing service, and sends the JSON messages to the Queue.

SQSRead → connects to the AWS SQS message queuing service, consumes JSON messages from the Queue, deserializes them, decomposes their elements and objects, and sends the values of the elements and objects to the Output Ports.

Timer → emits periodic events carrying a timestamp to the Output Port.
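To illustrate what decomposing JSON objects into elements (except arrays) can mean, here is a Python sketch of JSONin and JSONout that maps each nested object path to one value while keeping arrays whole. The dotted path naming (`user.id`) and the function names are assumptions made for this example, not the Fabrique Port naming scheme; the sketch also assumes every message is a JSON object and that keys contain no dots.

```python
import json
from typing import Any, Dict


def json_in(message: str) -> Dict[str, Any]:
    """Deserialize a JSON object and decompose nested objects into path -> value.

    Arrays are not decomposed: each array is emitted as a single value.
    """
    out: Dict[str, Any] = {}

    def walk(obj: Dict[str, Any], prefix: str) -> None:
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else key
            if isinstance(value, dict):
                walk(value, path)  # descend into nested objects
            else:
                out[path] = value  # scalars and arrays become Output Port values

    walk(json.loads(message), "")
    return out


def json_out(values: Dict[str, Any]) -> str:
    """Compose path -> value pairs back into nested objects and serialize them."""
    root: Dict[str, Any] = {}
    for path, value in values.items():
        node = root
        *parents, leaf = path.split(".")
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return json.dumps(root)


message = '{"user": {"id": 7, "name": "Ann"}, "tags": ["a", "b"]}'
ports = json_in(message)
print(ports)            # {'user.id': 7, 'user.name': 'Ann', 'tags': ['a', 'b']}
print(json_out(ports))  # {"user": {"id": 7, "name": "Ann"}, "tags": ["a", "b"]}
```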

Modifying Nodes

Constants → receives a trigger on the Input Port, declares the values of elements, objects, and arrays, and sends the values to the Output Ports.

Parser → receives a String value on the Input Port, applies patterns, automatically generates the Output Ports, extracts values according to the patterns, and sends the values to the Output Ports.

TypeConverter → receives a value of one type on the Input Port, converts it to another data type, and sends the converted value to the Output Port.

REST GetClient → receives a trigger and params on the Input Ports, sends a request to the external REST API Server, gets the response from the REST API Server, and sends the response to the Output Ports.

REST PostClient → receives a trigger and params on the Input Ports, sends the params to the external REST API Server, and sends the response to the Output Ports.

REST PutClient → receives a trigger and params on the Input Ports, requests the external REST API Server to update the values, and sends the response to the Output Ports.

REST DeleteClient → receives a trigger and params on the Input Ports, sends a deletion request to the external REST API Server, and sends the response to the Output Ports.
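The Parser and TypeConverter behavior can be sketched with Python's `re` module, assuming the patterns are regular expressions with named groups, so that each group name becomes an automatically generated Output Port. The function names, the log-line pattern, and the set of target types are hypothetical; the REST clients are omitted because they depend on an external server.

```python
import re
from typing import Any, Callable, Dict, Optional


def parser_node(text: str, pattern: str) -> Dict[str, Optional[str]]:
    """Output Ports are generated from the pattern's named groups."""
    compiled = re.compile(pattern)
    ports = list(compiled.groupindex)  # named groups, in definition order
    match = compiled.search(text)
    if match is None:
        return {port: None for port in ports}
    return {port: match.group(port) for port in ports}


def to_boolean(value: Any) -> bool:
    if isinstance(value, bool):
        return value
    text = str(value).strip().lower()
    if text in ("true", "1"):
        return True
    if text in ("false", "0"):
        return False
    raise ValueError(f"cannot convert {value!r} to boolean")


CONVERTERS: Dict[str, Callable[[Any], Any]] = {
    "integer": int,
    "number": float,
    "string": str,
    "boolean": to_boolean,
}


def type_converter_node(value: Any, target_type: str) -> Any:
    """Convert a value to the target data type; raises ValueError if it cannot."""
    return CONVERTERS[target_type](value)


LOG_PATTERN = r"(?P<date>\S+) (?P<level>[A-Z]+) (?P<message>.+)"
print(parser_node("2024-05-01 ERROR disk full", LOG_PATTERN))
# {'date': '2024-05-01', 'level': 'ERROR', 'message': 'disk full'}
print(type_converter_node("42", "integer"))  # 42
```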

Stateful Nodes

DictionaryWrite → receives the values of a key-value pair on the Input Port, looks up the key in the Dictionary Collection, writes the key-value pair to the Dictionary Collection, and returns the status of the write.

DictionaryGet → receives a key on the Input Port, looks up the key in the Dictionary Collection, gets the value for the key, and sends the value to the Output Port.

DictionaryDelete → receives a key on the Input Port, looks up the key in the Dictionary Collection, deletes the key-value pair from the Dictionary Collection, and sends the boolean status of the deletion to the Output Port.

WindowWrite → receives a timestamp on the Input Port, applies an aggregation function to the time window that matches the timestamp, appends the aggregate to the Window Collection, and sends the window timestamp to the Output Port.

WindowRead → receives a timestamp on the Input Port, requests the computed aggregates from the Window Collection, and sends the values of the selected aggregates to the Output Ports.
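An in-memory Python sketch of the two state collections. It assumes a Dictionary Collection behaves like a key-value map and that the Window Collection uses fixed-size (tumbling) windows with a count aggregate; the class names, the `created`/`updated` write status, and the window size are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Optional


class DictionaryCollection:
    """In-memory stand-in for a Dictionary Collection (hypothetical)."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}

    def write(self, key: str, value: Any) -> str:
        # Look the key up first so the status says whether it was created or updated.
        status = "updated" if key in self._data else "created"
        self._data[key] = value
        return status

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    def delete(self, key: str) -> bool:
        # Boolean status: True only if the key existed and was removed.
        if key in self._data:
            del self._data[key]
            return True
        return False


class WindowCollection:
    """Fixed-size (tumbling) time windows with a count aggregate (hypothetical)."""

    def __init__(self, window_size: int) -> None:
        self.window_size = window_size
        self._counts: Dict[int, int] = defaultdict(int)

    def window_start(self, timestamp: int) -> int:
        return timestamp - timestamp % self.window_size

    def write(self, timestamp: int) -> int:
        start = self.window_start(timestamp)
        self._counts[start] += 1  # aggregation function: count events in the window
        return start              # the window timestamp sent to the Output Port

    def read(self, timestamp: int) -> int:
        return self._counts.get(self.window_start(timestamp), 0)


windows = WindowCollection(window_size=60)
for ts in (125, 170, 181):
    windows.write(ts)
print(windows.read(130), windows.read(200))  # 2 1
```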

Structural Nodes

ArrayToElement → receives an array on the Input Ports, iterates over the array, extracts its elements, and transmits them element by element to the Output Ports.

ArrayToArray → receives an array on the Input Port, applies a group operation from the collection to the elements of the array, and sends the modified array to the Output Port.

ElementToArray → receives the elements of an array value by value on the Input Port, composes the elements into an array, and sends the array to the Output Port.

Decompose → receives a nested object as a value on the Input Port, decomposes the object into elements, and sends the elements to the Output Ports.

Compose → receives values on the Input Ports, composes them into the elements and objects of a JSON structure, and sends the JSON structure to the Output Port.
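A Python sketch of the array-oriented Structural Nodes, using a generator to model element-by-element transmission. The function names are hypothetical, and `sorted` merely stands in for one possible group operation from the collection.

```python
from typing import Any, Callable, Iterable, Iterator, List


def array_to_element(array: List[Any]) -> Iterator[Any]:
    """ArrayToElement: emit the array's elements one by one."""
    yield from array


def array_to_array(array: List[Any],
                   operation: Callable[[List[Any]], List[Any]]) -> List[Any]:
    """ArrayToArray: apply a group operation to all elements at once."""
    return operation(array)


def element_to_array(elements: Iterable[Any]) -> List[Any]:
    """ElementToArray: collect elements arriving one by one into an array."""
    return list(elements)


amounts = [3, 1, 2]
# Split into elements, transform each one, then reassemble the array.
doubled = element_to_array(x * 2 for x in array_to_element(amounts))
print(doubled)                          # [6, 2, 4]
print(array_to_array(amounts, sorted))  # [1, 2, 3]
```

Pairing ArrayToElement with ElementToArray is a common way to apply per-element logic between them while keeping the array shape at the edges.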

Node Graph

The Output Ports of one Node can be linked with the Input Ports of other Nodes.

Linked Nodes form a directed acyclic synchronous computational graph.

A Node Graph is an intuitive way to build, test, reuse, and explain event processing logic.

A Node Graph can implement very different kinds of processing logic, such as consuming messages from an external service, filtering messages, checking conditions, splitting a flow of events into multiple sub-flows, flattening a nested structure, enriching messages, collecting aggregates, detecting anomalies, etc.

A Node Graph can process a micro-batch of messages at once.

All Ports have to be linked, hidden, or deleted. Unlinked Ports are not allowed.

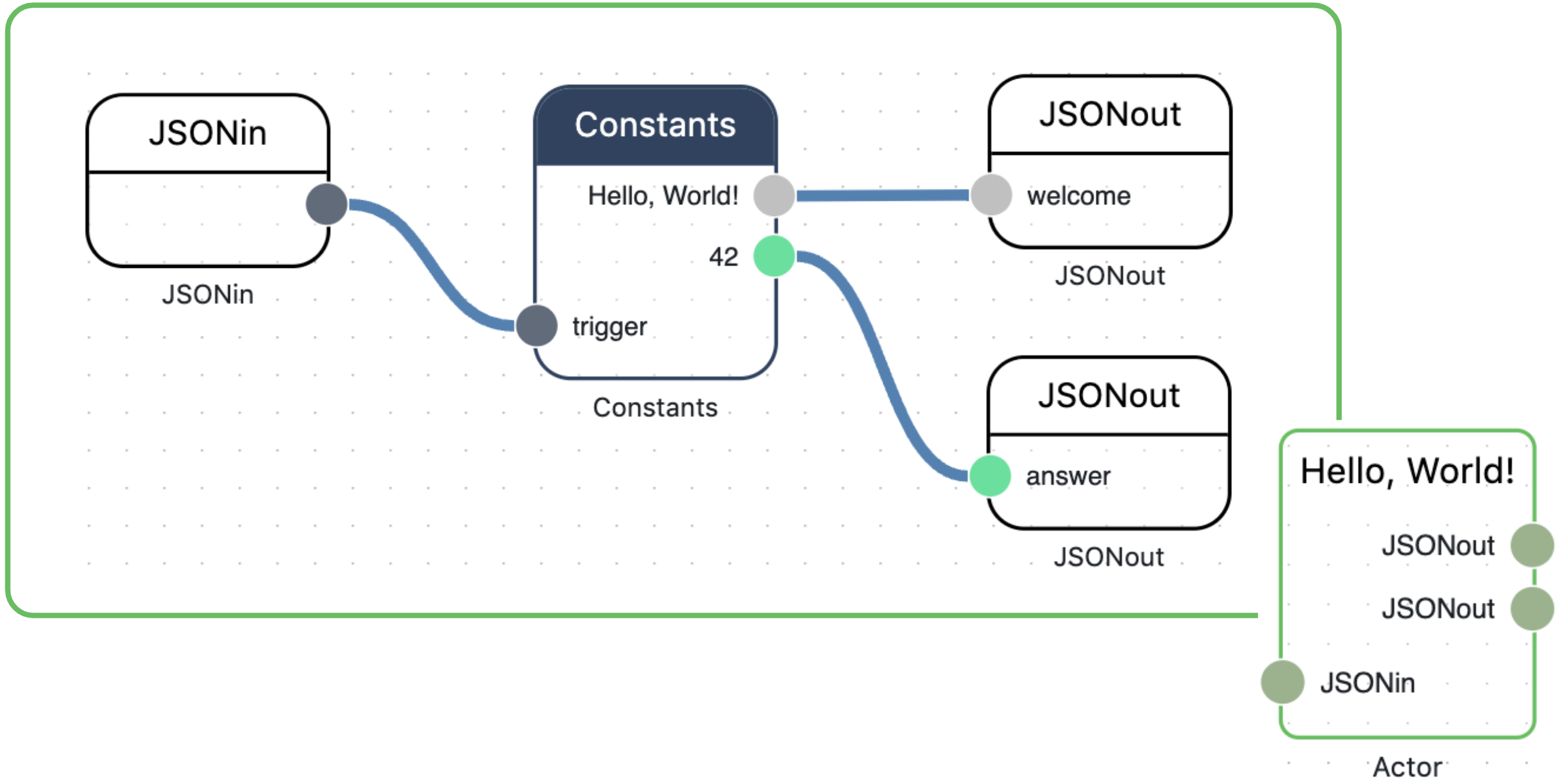
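The Node Graph rules described in this section (acyclic, synchronous, no unlinked Ports) can be illustrated with a small Python executor built on the standard-library `graphlib.TopologicalSorter` (Python 3.9+). The graph representation, `run_graph`, and the example Nodes are hypothetical; this is not how the Fabrique Runtime is implemented, only a sketch of the semantics.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical graph model: node name -> (input ports, output ports, processing logic)
NodeSpec = Tuple[List[str], List[str], Callable[[Dict[str, Any]], Dict[str, Any]]]
# A link connects an Output Port to an Input Port: (from node, from port, to node, to port)
Link = Tuple[str, str, str, str]


def run_graph(nodes: Dict[str, NodeSpec], links: List[Link]) -> Dict[str, Dict[str, Any]]:
    incoming = {(dst, dport): (src, sport) for src, sport, dst, dport in links}
    outgoing = {(src, sport) for src, sport, _, _ in links}
    # Reject unlinked Ports: every declared Input and Output Port must be linked.
    for name, (ins, outs, _) in nodes.items():
        for port in ins:
            if (name, port) not in incoming:
                raise ValueError(f"unlinked input port {name}.{port}")
        for port in outs:
            if (name, port) not in outgoing:
                raise ValueError(f"unlinked output port {name}.{port}")

    # A node depends on every node that feeds one of its Input Ports.
    predecessors: Dict[str, set] = {name: set() for name in nodes}
    for src, _, dst, _ in links:
        predecessors[dst].add(src)

    results: Dict[str, Dict[str, Any]] = {}
    # static_order() yields each node after its predecessors and raises
    # graphlib.CycleError if the links form a cycle.
    for name in TopologicalSorter(predecessors).static_order():
        ins, _, logic = nodes[name]
        inputs = {}
        for port in ins:
            src, sport = incoming[(name, port)]
            inputs[port] = results[src][sport]
        results[name] = logic(inputs)
    return results


written: List[Any] = []


def sink(values: Dict[str, Any]) -> Dict[str, Any]:
    written.append(values["flag"])  # side effect instead of Output Ports
    return {}


graph = {
    "source": ([], ["amount"], lambda v: {"amount": 150}),
    "check": (["amount"], ["big"], lambda v: {"big": v["amount"] > 100}),
    "sink": (["flag"], [], sink),
}
links = [("source", "amount", "check", "amount"), ("check", "big", "sink", "flag")]
print(run_graph(graph, links))  # {'source': {'amount': 150}, 'check': {'big': True}, 'sink': {}}
print(written)                  # [True]
```

Executing Nodes in topological order is what makes the graph synchronous in this sketch: each Node runs only after every Node feeding its Input Ports has produced its values.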

Actor

Instead of building a Monster Node Graph, it’s much more practical to decompose complex processing logic into multiple stand-alone Simple Node Graphs.

The rule of thumb: make Node Graphs as simple as possible.

Simple Node Graphs make it possible to atomize and isolate processing logic, computational resources, error handling, testing, collaborative work, etc.

Simple Node Graphs are isolated from each other by Actors.

An Actor is a microservice that can be deployed and maintained independently, run as multiple parallel instances, and provisioned with the computational resources it requires.

Topic

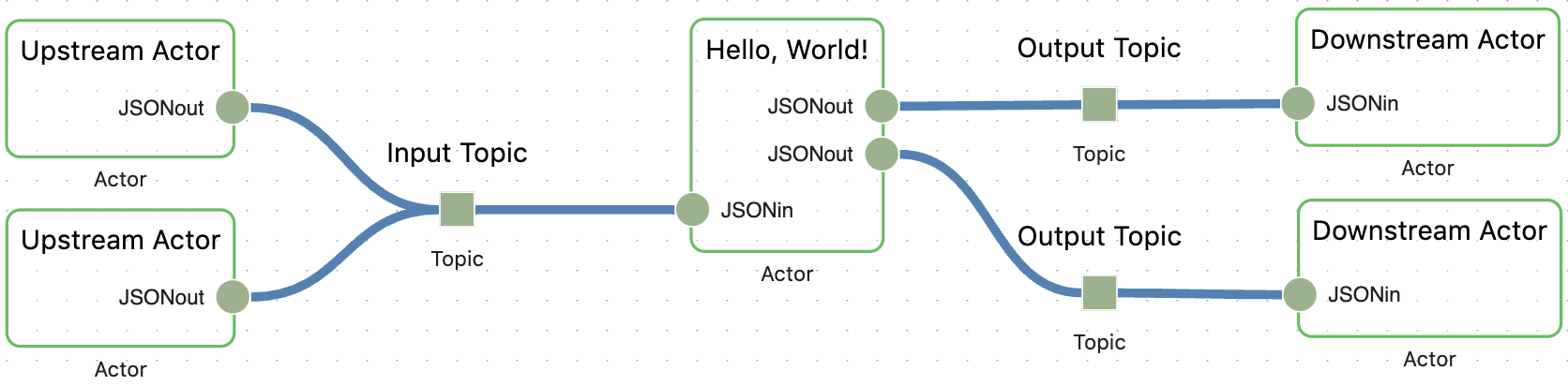

Actors consume input messages, execute Simple Node Graphs, and produce output results.

If an Actor consumes messages from an upstream Actor or produces messages to feed a downstream Actor, a Topic is required to link them. A Topic can link two or more Actors.

Actors are not allowed to be linked directly.

Topics link Actors asynchronously to decouple the execution of Simple Node Graphs.

Topics and Actors make it possible to build complex directed asynchronous computational graphs that, unlike Node Graphs, may contain cycles.

Actors employ the special JSONin and JSONout Nodes from the In/Out Group to link input and output Topics respectively.

Actors may consume messages from multiple Input Topics and produce messages to multiple Output Topics.

Once processed, a message is deleted from a Topic after its retention time expires.
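The asynchronous decoupling of Actors through Topics can be sketched with Python's `asyncio`, letting an `asyncio.Queue` stand in for a Topic and a plain function stand in for each Actor's Simple Node Graph. Everything here (`actor`, `enrich`, `label`, the `None` end-of-stream marker) is hypothetical, and message retention is not modeled.

```python
import asyncio
from typing import Callable, List, Optional

Message = dict


async def actor(inbox: asyncio.Queue, outbox: Optional[asyncio.Queue],
                graph: Callable[[Message], Optional[Message]],
                collected: List[Message]) -> None:
    while True:
        message = await inbox.get()
        if message is None:  # end-of-stream marker used only by this sketch
            if outbox is not None:
                await outbox.put(None)
            return
        result = graph(message)
        if result is None:  # the graph filtered the message out
            continue
        if outbox is not None:
            await outbox.put(result)  # publish to the downstream Topic
        else:
            collected.append(result)  # last Actor in the chain


def enrich(m: Message) -> Optional[Message]:
    return {**m, "vip": m["amount"] > 100} if m["amount"] > 0 else None


def label(m: Message) -> Message:
    return {"user": m["user"], "label": "VIP" if m["vip"] else "regular"}


async def main() -> List[Message]:
    input_topic: asyncio.Queue = asyncio.Queue()
    enriched_topic: asyncio.Queue = asyncio.Queue()
    results: List[Message] = []
    # The two Actors never call each other; they only share enriched_topic.
    tasks = [
        asyncio.create_task(actor(input_topic, enriched_topic, enrich, results)),
        asyncio.create_task(actor(enriched_topic, None, label, results)),
    ]
    for m in [{"user": "ann", "amount": 150}, {"user": "bob", "amount": 0},
              {"user": "cy", "amount": 20}]:
        await input_topic.put(m)
    await input_topic.put(None)
    await asyncio.gather(*tasks)
    return results


results = asyncio.run(main())
print(results)  # [{'user': 'ann', 'label': 'VIP'}, {'user': 'cy', 'label': 'regular'}]
```

The upstream Actor keeps consuming without waiting for the downstream Actor to finish each message; the two only meet at the shared queue, just as Actors only meet at a Topic.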

State

Besides messages, Actors may use shared States.

One Actor may collect aggregates while another Actor consumes those aggregates independently.

Nodes from the Stateful Group are used to write, read, update, and delete records in collections.

One Actor may operate with multiple collections.

Once created or updated, a record is retained until it is deleted on command or its retention time expires.
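A sketch of retention for State records, assuming the retention time is counted from a record's last create or update. `RetainedCollection` and its methods are hypothetical, and this sketch removes expired records lazily when they are read; the actual cleanup strategy is not described here.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class RetainedCollection:
    """Records expire `retention` seconds after their last create/update (hypothetical)."""

    def __init__(self, retention: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.retention = retention
        self.clock = clock  # injectable so the example below is deterministic
        self._records: Dict[str, Tuple[Any, float]] = {}

    def write(self, key: str, value: Any) -> None:
        # Creating or updating a record restarts its retention period.
        self._records[key] = (value, self.clock())

    def read(self, key: str) -> Optional[Any]:
        entry = self._records.get(key)
        if entry is None:
            return None
        value, written_at = entry
        if self.clock() - written_at >= self.retention:
            del self._records[key]  # expired: removed lazily on access
            return None
        return value

    def delete(self, key: str) -> bool:
        # Deletion on command; True if the record existed.
        return self._records.pop(key, None) is not None


now = [0.0]
store = RetainedCollection(retention=10, clock=lambda: now[0])
store.write("sensor-1", 21.5)
now[0] = 5.0
store.write("sensor-1", 22.0)  # update at t=5 restarts retention
now[0] = 12.0
print(store.read("sensor-1"))  # 22.0
now[0] = 15.0
print(store.read("sensor-1"))  # None
```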

Project

Actors, Topics, and States form a Project.

Projects have Versions.

Each time a Project is saved, it gets a new Version.

A Version of a Project can be Deployed in Runtime.

A version of a Project can be Duplicated, Shared or made Public.